Microservices with minimum overhead using ASP.NET Web API and Azure – part 2 – Deployment

This is the second of two posts about an approach to building cost-effective systems that are nonetheless ready to scale, using ASP.NET Web API and Azure.

If you haven’t read the first part, I strongly recommend doing so.

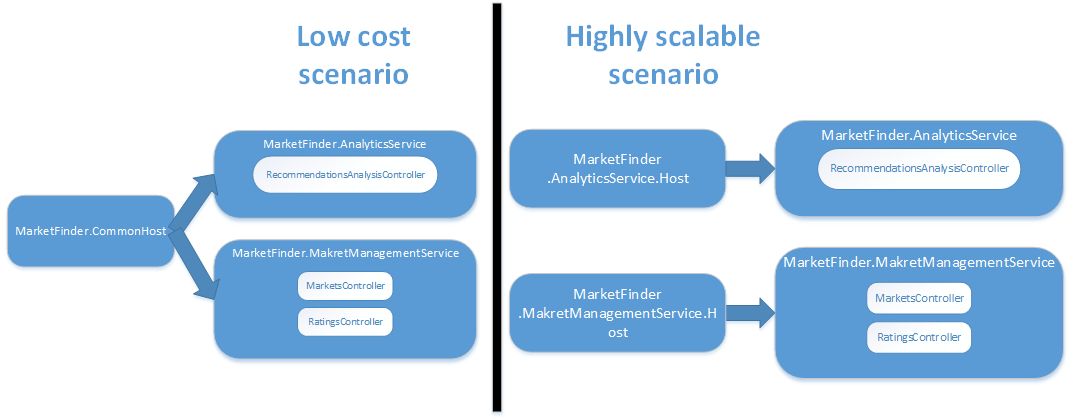

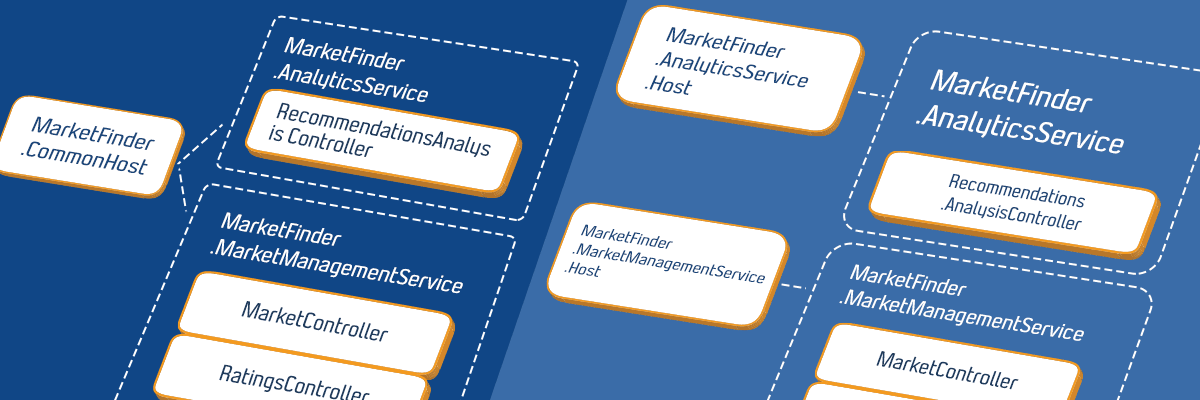

Just to remind, my goal is to create environment that lets us build fast, but build a system that can be easily scaled in the future. Last week, I’ve proposed architecture of .NET service oriented system, which can be both hosted on a single machine and easily spread on multiple microservices. In short, it consists of multiple .NET class libraries containing ASP.NET MVC (Web API) controllers. They can be hosted just by referencing them from one or multiple Web Application projects.

Below, a draft presenting both scenarios:

This week I’m going to focus on how to use Azure PaaS-level services to support the goal of building the system fast.

Show me what you got – deployment

We all know it is important to deliver pieces of software to product owners and stakeholders as soon as possible, so they can see whether we are building what they really need. For this reason, we want to set up a deployment environment quickly. Time spent configuring and managing servers is wasted here. Instead, we can deploy the system to one of the Platform as a Service (PaaS) offerings, which give us a lot of setup out of the box. With them, you can very quickly configure a platform that runs the application without having to worry about machine performance, security, the operating system, server management, etc.

- Create Azure SQL database

- Go to portal.azure.com,

- Select New -> Data + Storage -> SQL Database,

- Enter DB name, select or create new server – this is only for authorization purpose,

- Select Pricing tier – basic is sufficient for this moment,

- Create resource group (you should create one new group for all services associated with particular system),

- Use CreateDatabaseTables.sql script from repository to create its structure – initially this can be done manually, however we will soon automate this process to make sure, DB is always up to date with code.

- Create new Web App

- Select New -> Web + Mobile -> Web App,

- Enter name, resource group (one created with database),

- Select pricing plan. For sake of early testing and publishing application to stakeholders, free or shared plan is perfect. You can scale it up in seconds, at any time.

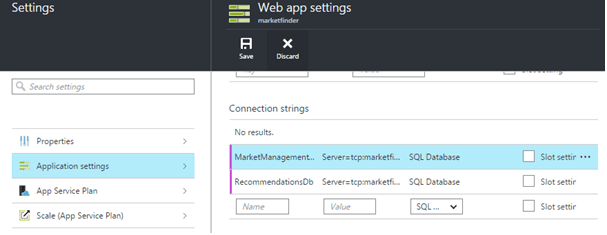

- Make them work together. We are going to configure Web App to use created SQL Database by overriding web.config settings. This Web Apps feature lets us avoid storing environment dependent keys and passwords in source code repository

- Go to created SQL Database blade,

- Click “Show database connection string”,

- Copy ADO.NET connection string,

- Go to created Web App blade,

- Select Settings, then Application Settings, scroll down to Connection Strings section and paste database connection string. Do not forget to fill it with password to your server. Azure can’t get it for you because it is hashed.

- Create Azure SQL database

- Configure continuous deployment

- Go to Web App blade,

- In Deployment section click “Set up continuous deployment”,

- Follow wizard to setup deployment of your choice. In my case, I login to Github, select project linked above and select master branch. You can try this by forking my Github project.

- Every time we deploy something to master branch, it is automatically deployed to Web App,

- If the above solution do not fit your process, Web App can be configured to wait until we explicitly push source code into it.
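On the application side, App Service makes each connection string entered in the portal available to the running app; for .NET apps it overrides the matching entry in web.config. It also exposes connection strings as environment variables prefixed by their type, for example `SQLAZURECONNSTR_` for the SQL Database type. The Python sketch below shows the same lookup-with-fallback idea outside .NET. `DefaultConnection` and the local connection string are hypothetical, and the prefix list covers only some of the types App Service supports.

```python
import os
from typing import Mapping, Optional

# Some of the prefixes App Service uses when it exposes connection strings as
# environment variables (the prefix depends on the type chosen in the portal).
CONNECTION_STRING_PREFIXES = ("SQLAZURECONNSTR_", "SQLCONNSTR_", "CUSTOMCONNSTR_")


def resolve_connection_string(name: str, local_default: str,
                              env: Optional[Mapping[str, str]] = None) -> str:
    """Return the value configured in the hosting environment, if any.

    Falls back to the local development value, so no production password
    has to be committed to the repository.
    """
    env = os.environ if env is None else env
    for prefix in CONNECTION_STRING_PREFIXES:
        value = env.get(prefix + name)
        if value:
            return value
    return local_default


# Hypothetical local value, used only when nothing is configured in Azure.
LOCAL = "Server=(localdb)\\MSSQLLocalDB;Database=Shop;Trusted_Connection=True;"
```

Running in Azure, `resolve_connection_string("DefaultConnection", LOCAL)` would pick up the value pasted into the portal; on a developer machine it returns `LOCAL`.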

Voilà, our application is up and running.
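Creating the database structure is still a manual step at this point. One common way to automate it, not necessarily the one this series ends up using, is a versioned migration runner: each schema change gets a number, and a table in the database records which numbers have already been applied. The sketch below uses Python's built-in `sqlite3` as a stand-in for Azure SQL; the `SchemaVersions` table and the two migrations are hypothetical examples.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical, ordered migrations. In the article's setup, version 1 would hold
# the contents of CreateDatabaseTables.sql; these statements are invented examples.
MIGRATIONS: List[Tuple[int, str]] = [
    (1, "CREATE TABLE Orders (Id INTEGER PRIMARY KEY, Total REAL NOT NULL)"),
    (2, "ALTER TABLE Orders ADD COLUMN CreatedAt TEXT"),
]


def migrate(conn: sqlite3.Connection) -> List[int]:
    """Apply every migration not yet recorded in SchemaVersions; return their versions."""
    conn.execute("CREATE TABLE IF NOT EXISTS SchemaVersions (Version INTEGER PRIMARY KEY)")
    done = {row[0] for row in conn.execute("SELECT Version FROM SchemaVersions")}
    applied: List[int] = []
    for version, sql in MIGRATIONS:
        if version in done:
            continue  # already applied on an earlier deployment
        with conn:  # commits when the block finishes without an exception
            conn.execute(sql)
            conn.execute("INSERT INTO SchemaVersions (Version) VALUES (?)", (version,))
        applied.append(version)
    return applied
```

Because already-applied versions are skipped, `migrate` can run on every deployment: on a fresh database it returns `[1, 2]`, and a second call returns `[]`.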

Going live – production environment

The environment we created is good for development and testing; however, before we ship it to the public, we need to take care of more aspects. Let’s list some of them and see what it takes to achieve each one with and without Azure PaaS services.

[table id=14 /]

Time for scaling

Everybody hopes their application will be successful. However, when that happens, you may realize you cannot handle the popularity. Let’s see how prepared we are to scale.

Scale using Azure capabilities

The simplest thing you can do is scale up: just change the service’s pricing plan to a higher one. For most PaaS services this takes just a few seconds and does not cause any downtime. However, this approach is limited by what the machine behind the highest pricing plan can handle.

Another option is scaling out, which means creating multiple instances of our service that are automatically load balanced. To do this, we can simply move a slider in the Azure Portal or, better, configure autoscaling rules. A typical rule set increases the number of service instances when average CPU utilization is higher than, for example, 50% for 10 minutes, and decreases it when it is lower than 20% for 20 minutes.
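The example rule set can be expressed as a small evaluation function. This is a simplified Python model, not how Azure autoscale is implemented: it assumes one CPU sample per minute, evaluates the scale-out rule first, clamps the result between hypothetical minimum and maximum instance counts, and ignores the cool-down period that real autoscale settings also include.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Rule:
    threshold: float     # CPU utilization in percent
    window_minutes: int  # period the average is taken over
    above: bool          # True: trigger when the average is above the threshold
    change: int          # instances to add (positive) or remove (negative)


def average_over(samples: List[float], window_minutes: int) -> float:
    """Average of the most recent samples, assuming one sample per minute."""
    recent = samples[-window_minutes:]
    return sum(recent) / len(recent)


def desired_instances(current: int, samples: List[float], rules: List[Rule],
                      minimum: int = 1, maximum: int = 10) -> int:
    """Apply the first rule that triggers; keep the current count otherwise."""
    for rule in rules:
        if len(samples) < rule.window_minutes:
            continue  # not enough history to evaluate this rule yet
        average = average_over(samples, rule.window_minutes)
        triggered = average > rule.threshold if rule.above else average < rule.threshold
        if triggered:
            return max(minimum, min(maximum, current + rule.change))
    return current


# The example rule set: out above 50% for 10 min, in below 20% for 20 min.
RULES = [
    Rule(threshold=50, window_minutes=10, above=True, change=+1),
    Rule(threshold=20, window_minutes=20, above=False, change=-1),
]
```

With ten minutes at 80% CPU and 2 instances, `desired_instances(2, [80.0] * 10, RULES)` returns 3; with twenty minutes at 10% it returns 1. The gap between the two thresholds keeps the instance count from flapping when load hovers around a single value.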

There is one important thing to remember: to scale out, your application must be stateless. All state needs to be moved to an external store, such as SQL Database or Redis cache. It is worth keeping this in mind from the very beginning of the project.
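The difference between in-process and external state shows up as soon as a load balancer sends consecutive requests from the same user to different instances. In this hypothetical Python sketch, `InMemoryStore` stands in for an external store such as Redis or SQL Database, and the shopping-cart counter is an invented example.

```python
from typing import Dict, Protocol


class Store(Protocol):
    def get(self, key: str) -> int: ...
    def set(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Stand-in for an external store such as Redis or SQL Database."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str) -> int:
        return self._data.get(key, 0)

    def set(self, key: str, value: int) -> None:
        self._data[key] = value


class StatefulInstance:
    """Anti-pattern: the cart size lives in this instance's own memory."""

    def __init__(self) -> None:
        self._carts: Dict[str, int] = {}

    def add_item(self, user: str) -> int:
        self._carts[user] = self._carts.get(user, 0) + 1
        return self._carts[user]


class StatelessInstance:
    """Keeps nothing between requests; every call goes through the shared store."""

    def __init__(self, store: Store) -> None:
        self._store = store

    def add_item(self, user: str) -> int:
        count = self._store.get(user) + 1
        self._store.set(user, count)
        return count
```

Two `StatefulInstance` objects each report a count of 1 for the same user, so the second instance never sees the first item, while two `StatelessInstance` objects sharing one store return 1 and then 2. With a real Redis backend, the read-modify-write in `add_item` would be better replaced by an atomic operation such as `INCR`, since two instances may handle requests for the same user concurrently.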

Scale by breaking code into services

At some point, the approach above might be insufficient. For example, your SQL Database may not handle the traffic even at the highest pricing plan. Or a single component may use a lot of CPU at certain times, and we do not want the whole application to suffer because of it.

The architecture proposed in the previous post lets us simply extract a number of the system’s components into independent hosts. We can then deploy them to separate Azure Web Apps and SQL databases.
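Because components do not depend on where they are hosted, extracting one is mostly a matter of regrouping. The Python model below illustrates this idea; it is not the C# code from the previous post. `Component` stands in for a class library of controllers and `Host` for a Web Application project that references it; the Orders and Users routes are hypothetical.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], dict]


class Component:
    """Stands in for one class library holding a group of controllers."""

    def __init__(self, name: str, routes: Dict[str, Handler]):
        self.name = name
        self.routes = routes


class Host:
    """Stands in for a Web Application project that references components."""

    def __init__(self, name: str, components: List[Component]):
        self.name = name
        self.routes: Dict[str, Handler] = {}
        for component in components:
            for path, handler in component.routes.items():
                if path in self.routes:
                    raise ValueError(f"duplicate route {path!r} in host {name}")
                self.routes[path] = handler

    def handle(self, path: str, request: dict) -> dict:
        return self.routes[path](request)


# Hypothetical components, for illustration only.
orders = Component("Orders", {"/api/orders": lambda req: {"orders": []}})
users = Component("Users", {"/api/users": lambda req: {"user": req.get("id")}})

# Scenario 1: a single host serving every component.
monolith = Host("AllInOne", [orders, users])

# Scenario 2: each component extracted into its own host.
orders_service = Host("OrdersService", [orders])
users_service = Host("UsersService", [users])
```

`monolith.handle("/api/users", {"id": 7})` and `users_service.handle("/api/users", {"id": 7})` both return `{"user": 7}`; only the grouping of components into hosts differs. Rejecting duplicate routes reflects the need for components hosted together not to claim the same URL.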

Optimizing costs

When our system gets more traffic, the entire environment can cost quite a sum of money every month. It is hard to anticipate whether it is cheaper to host the system on a small number of big services or on many small ones. With the proposed architecture, it is relatively easy to create both configurations, run tests and look for the most cost-effective setup for a particular system.

Another important aspect of the cloud is that you can easily scale down or even shut down services when they are not used. This can be easily automated, and in some cases it can cut costs by more than half. So even though Azure or AWS services might be more expensive than local datacenters, thanks to this flexibility you pay only for the traffic you actually get, not just for being ready to handle a lot of it.
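Once load tests tell you how many instances of each service a layout needs, comparing layouts boils down to simple arithmetic. The prices, service names and instance counts below are invented placeholders, not Azure rates; the 730-hour month is a common approximation of 8760 hours divided by 12.

```python
from dataclasses import dataclass
from typing import Dict, List

HOURS_PER_MONTH = 730  # common approximation: 8760 hours / 12 months


@dataclass
class Service:
    name: str
    hourly_price: float  # placeholder price per instance-hour, NOT a real Azure rate
    instances: int       # instance count needed under load, e.g. from load tests


def monthly_cost(layout: List[Service]) -> float:
    return sum(s.hourly_price * s.instances * HOURS_PER_MONTH for s in layout)


def cheapest(layouts: Dict[str, List[Service]]) -> str:
    """Name of the layout with the lowest estimated monthly cost."""
    return min(layouts, key=lambda name: monthly_cost(layouts[name]))


# Hypothetical layouts of the same system.
LAYOUTS = {
    "few big hosts": [Service("all-in-one", hourly_price=0.40, instances=3)],
    "many small hosts": [
        Service("orders", hourly_price=0.10, instances=2),
        Service("users", hourly_price=0.10, instances=1),
        Service("reports", hourly_price=0.20, instances=2),
    ],
}
```

With these made-up numbers, `cheapest(LAYOUTS)` returns `"many small hosts"` (about 511 versus 876 per month), but different prices or load profiles can easily flip the result, which is why measuring both configurations is worthwhile.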
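How much a shutdown schedule saves is easy to estimate. The sketch below assumes billing is proportional to running hours and drops to zero while a resource is shut down; whether that holds depends on how each service bills a stopped or scaled-down resource. The working-hours schedule is a hypothetical example.

```python
HOURS_PER_WEEK = 24 * 7


def running_fraction(hours_per_day: float, days_per_week: int) -> float:
    """Share of the week a resource runs under a simple daily schedule."""
    return (hours_per_day * days_per_week) / HOURS_PER_WEEK


def savings(hours_per_day: float, days_per_week: int) -> float:
    """Fraction of an always-on bill saved, assuming cost scales with running hours."""
    return 1 - running_fraction(hours_per_day, days_per_week)
```

A test environment used 10 hours a day on 5 weekdays runs 50 of 168 hours, so `savings(10, 5)` is about 0.70, roughly 70% less than keeping it on all week.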

Summary

In this article I’ve tried to present how we can use the PaaS-level services available in Azure both to build an MVP fast and to meet the requirements of live systems once we reach that stage. By buying such services from Microsoft, we save the time we would otherwise spend managing servers and networks. Additionally, we can achieve high availability, scalability, security and monitoring without hiring an army of Ops engineers.

I’ve briefly described just a couple of the services available in Azure, but there are many more; to mention just a few: Storage, DocumentDB, Azure Search, Mobile Apps, Logic Apps, Machine Learning. You can really solve most of your problems without leaving the comfort of platforms sold as a service.